Rendezvous hashing

Rendezvous or highest random weight (HRW) hashing[1][2] is an algorithm that allows clients to achieve distributed agreement on a set of k options out of a possible set of n options. A typical application is when clients need to agree on which sites (or proxies) objects are assigned to. When k is 1, it subsumes the goals of consistent hashing, using an entirely different method.

History

Rendezvous hashing was invented in 1996 by David Thaler and Chinya Ravishankar at the University of Michigan. Consistent hashing appears to have been devised around the same time at MIT. One of the first applications of rendezvous hashing was to enable multicast clients on the Internet (in contexts such as the MBONE) to identify multicast rendezvous points in a distributed fashion.[3][4] It was used in 1998 by Microsoft's Cache Array Routing Protocol (CARP) for distributed cache coordination and routing.[5][6] Some Protocol Independent Multicast routing protocols use rendezvous hashing to pick a rendezvous point.[1]

Given its simplicity and generality, rendezvous hashing has been applied in a wide variety of applications, including mobile caching,[7] router design,[8] secure key establishment,[9] and sharding and distributed databases.[10]

The HRW algorithm for rendezvous hashing

Rendezvous hashing solves the distributed hash table problem: How can a set of clients, given an object O, agree on where in a set of n sites (servers, say) to place O? Each client is to select a site independently, but all clients must end up picking the same site. This is non-trivial if we add a minimal disruption constraint, and require that only objects mapping to the removed site may be reassigned to other sites.

The basic idea is to give each site Sj a score (a weight) for each object Oi, and assign the object to the highest scoring site. All clients first agree on a hash function h(). For object Oi, the site Sj is defined to have weight wi,j = h(Oi, Sj). HRW assigns Oi to the site Sm whose weight wi,m is the largest. Since h() is agreed upon, each client can independently compute the weights wi,1, wi,2, ..., wi,n and pick the largest. If the goal is distributed k-agreement, the clients can independently pick the sites with the k largest hash values.

If a site S is added or removed, only the objects mapping to S are remapped to different sites, satisfying the minimal disruption constraint above. The HRW assignment can be computed independently by any client, since it depends only on the identifiers for the set of sites S1, S2, ..., Sn and the object being assigned.

HRW easily accommodates different capacities among sites. If site Sk has twice the capacity of the other sites, we simply represent Sk twice in the list, say, as Sk,1 and Sk,2. Clearly, twice as many objects will now map to Sk as to the other sites.

Properties

It might first appear sufficient to treat the n sites as buckets in a hash table and hash the object name O into this table. However, if any of the sites fails or is unreachable, the hash table size changes, requiring all objects to be remapped. This massive disruption makes such direct hashing unworkable. Under rendezvous hashing, however, clients handle site failures by picking the site that yields the next largest weight. Remapping is required only for objects currently mapped to the failed site, and as proved in,[1][2] disruption is minimal. Rendezvous hashing has the following properties.

- Low overhead: The hash function used is efficient, so overhead at the clients is very low.

- Load balancing: Since the hash function is randomizing, each of the n sites is equally likely to receive the object O. Loads are uniform across the sites.

- Site capacity: Sites with different capacities can be represented in the site list with multiplicity in proportion to capacity. A site with twice the capacity of the other sites will be represented twice in the list, while every other site is represented once.

- High hit rate: Since all clients agree on placing an object O into the same site SO , each fetch or placement of O into SO yields the maximum utility in terms of hit rate. The object O will always be found unless it is evicted by some replacement algorithm at SO .

- Minimal disruption: When a site fails, only the objects mapped to that site need to be remapped. Disruption is at the minimal possible level, as proved in.[1][2]

- Distributed k-agreement: Clients can reach distributed agreement on k sites simply by selecting the top k sites in the ordering, as in.[9]

Comparison with consistent hashing

Consistent hashing operates by mapping sites uniformly and randomly to points on a unit circle called tokens. Objects are also mapped to the unit circle and placed in the site whose token is the first encountered traveling clockwise from the object's location. When a site is removed, the objects it owns are transferred to the site owning the next token encountered moving clockwise. Provided each site is mapped to a large number (100-200, say) of tokens this will reassign objects in a relatively uniform fashion among the remaining sites.

If sites are mapped to points on the circle randomly by hashing 200 variants of the site ID, say, the assignment of any object requires storing or recalculating 200 hash values for each site. However, the tokens associated with a given site can be precomputed and stored in a sorted list, requiring only a single application of the hash function to the object, and a binary search to compute the assignment. Even with many tokens per site, however, the basic version of consistent hashing may not balance objects uniformly over sites, since when a site is removed each object assigned to it is distributed only over as many other sites as the site has tokens (say 100-200).

In contrast, rendezvous hashing (HRW) distributes objects uniformly over all sites, given a uniform hash function. Unlike consistent hashing, HRW requires no precomputing or storage of tokens. An object Oi is placed into one of n sites S1, ..., Sn by computing the n hash values h(Oi,Sj) and picking the site Sk that yields the highest hash value. If a new site Sn+1 is added, new object placements or requests will compute n+1 hash values, and pick the largest of these. If an object already in the system at Sk maps to this new site Sn+1, it will be fetched afresh and cached at Sn+1. All clients will henceforth obtain it from this site, and the old cached copy at Sk will ultimately be replaced by the local cache management algorithm. If Sk is taken offline, its objects will be remapped uniformly to the remaining n-1 sites.

Variants of consistent hashing (such as Amazon's Dynamo[11]) that use more complex logic to distribute tokens on the unit circle offer better load balancing, further reduce the overhead of adding new sites, and reduce metadata overhead while offering other benefits. Complexity increases, however. Variants of the HRW algorithm, such as the use of a skeleton (see below), can reduce the O(n) time for object location to O(log(n)), at the cost of less global uniformity of placement. When n is not too large, however, the O(n) placement cost of basic HRW is not likely to be a problem. HRW completely avoids all the overhead and complexity associated with correctly handling multiple tokens for each site and associated metadata.

Rendezvous hashing also has the great advantage that it provides simple solutions to other important problems, such as distributed k-agreement.

Reducing consistent hashing to rendezvous hashing

Consistent hashing can be reduced to an instance of HRW by an appropriate choice of a two-place hash function. From the site identifier the simplest version of consistent hashing computes a list of token positions, e.g., where hashes values to locations on the unit circle. Define the two place hash function to be where denotes the distance along the unit circle from to (since has some minimal non-zero value there is no problem translating this value to a unique integer in some bounded range). This will duplicate exactly the assignment produced by consistent hashing.

It is not possible, however, to reduce HRW to consistent hashing (assuming the number of tokens per site is bounded), since HRW potentially reassigns the objects from a removed site to an unbounded number of other sites.

Implementation

Implementation is straightforward once a hash function h() is chosen (the original work on the HRW method[1][2] makes a hash function recommendation). Each client only needs to compute a hash value for each of the n sites, and then pick the largest. This algorithm runs in O(n) time. If the hash function is efficient, the O(n) running time is not a problem, unless n is very large.

Skeleton-based variant for very large n

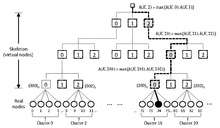

When n is extremely large, the following variant can improve running time.[12][13][14] This approach creates a virtual hierarchical structure (called a skeleton), and achieves O(log n) running time by applying HRW at each level while descending the hierarchy. The idea is to first choose some constant m and organize the n sites into c = ceiling(n/m) clusters C1, = {S1, S2, ... ,Sm}, C2 = {Sm+1, Sm+2, ... ,S2m}, ... Next, build a virtual hierarchy by choosing a constant f and imagining these c clusters placed at the leaves of a tree T of virtual nodes, each with fanout f.

In the accompanying diagram, the cluster size is m = 4, and the skeleton fanout is f = 3. Assuming 108 sites (real nodes) for convenience, we get a three-tier virtual hierarchy. Since f = 3, each virtual node has a natural numbering in octal. Thus, the 27 virtual nodes at the lowest tier would be numbered 000, 001, 002, ..., 221, 222, in octal. (We can, of course, vary the fanout at each level. In that case, each node will be identified with the corresponding mixed-radix number.)

Instead of applying HRW to all 108 real nodes, we can first apply HRW to the 27 lowest-tier virtual nodes, selecting one. We then apply HRW to the four real nodes in its cluster, and choose the winning site. We only need 27 + 4 = 31 hashes, rather than 108. If we apply this method starting one level higher in the hierarchy, we would need 9 + 3 + 4 = 16 hashes to get to the winning site. The figure shows how, if we proceed starting from the root of the skeleton, we may successively choose the virtual nodes (2)3, (20)3, and (200)3, and finally end up with site 74.

We can start at any level in the virtual hierarchy, not just at the root. Starting lower in the hierarchy requires more hashes, but may improve load distribution in the case of failures. Also, the virtual hierarchy need not be stored, but can be created on demand, since the virtual nodes names are simply prefixes of base-f (or mixed-radix) representations. We can easily create appropriately sorted strings from the digits, as required. In the example, we would be working with the strings 0, 1, 2 (at tier 1), 20, 21, 22 (at tier 2), and 200, 201, 202 (at tier 3). Clearly, T has height h = O (log c) = O (log n), since m and f are both constants. The work done at each level is O (1), since f is a constant.

For any given object O, it is clear that the method chooses each cluster, and hence each of the n sites, with equal probability. If the site finally selected is unavailable, we can select a different site within the same cluster, in the usual manner. Alternatively, we could go up one or more tiers in the skeleton and select an alternate from among the sibling virtual nodes at that tier, and once again descend the hierarchy to the real nodes, as above.

The value of m can be chosen based upon such factors as the anticipated failure rate and the degree of load balancing desired. A higher value of m leads to less load skew in the event of failure, at the cost of somewhat higher search overhead.

The choice m = n is equivalent to non-hierarchical rendezvous hashing. In practice, the hash h() is very cheap, so m = n can work quite well unless n is very high.

References

- 1 2 3 4 5 Thaler, David; Chinya Ravishankar. "A Name-Based Mapping Scheme for Rendezvous" (PDF). University of Michigan Technical Report CSE-TR-316-96. Retrieved 2013-09-15.

- 1 2 3 4 Thaler, David; Chinya Ravishankar (February 1998). "Using Name-Based Mapping Schemes to Increase Hit Rates". IEEE/ACM Transactions on Networking. 6 (1): 1–14. doi:10.1109/90.663936.

- ↑ Blazevic, Ljubica. "Distributed Core Multicast (DCM): a routing protocol for many small groups with application to mobile IP telephony". IETF Draft. IETF. Retrieved September 17, 2013.

- ↑ Fenner, B. "Protocol Independent Multicast - Sparse Mode (PIM-SM): Protocol Specification (Revised)". IETF RFC. IETF. Retrieved September 17, 2013.

- ↑ Valloppillil, Vinod; Kenneth Ross. "Cache Array Routing Protocol v1.0". Internet Draft. Retrieved September 15, 2013.

- ↑ "Cache Array Routing Protocol and Microsoft Proxy Server 2.0" (PDF). Microsoft. Retrieved September 15, 2013.

- ↑ Mayank, Anup; Ravishankar, Chinya (2006). "Supporting mobile device communications in the presence of broadcast servers" (PDF). International Journal of Sensor Networks. 2 (1/2): 9–16.

- ↑ Guo, Danhua; Bhuyan, Laxmi; Liu, Bin (October 2012). "An efficient parallelized L7-filter design for multicore servers". IEEE/ACM Transactions on Networking (TON). 20 (5): 1426–1439.

- 1 2 Wang, Peng; Ravishankar, Chinya (2015). "Key Foisting and Key Stealing Attacks in Sensor Networks'" (PDF). International Journal of Sensor Networks.

- ↑ Mukherjee, Niloy; et al. (August 2015). "Distributed Architecture of Oracle Database In-memory". Proceedings of the VLDB Endowment. 8 (12): 1630–1641.

- ↑ http://www.allthingsdistributed.com/files/amazon-dynamo-sosp2007.pdf

- ↑ Yao, Zizhen; Ravishankar, Chinya; Tripathi, Satish (May 13, 2001). Hash-Based Virtual Hierarchies for Caching in Hybrid Content-Delivery Networks (PDF). Riverside, CA: CSE Department, University of California, Riverside. Retrieved 15 November 2015.

- ↑ Wang, Wei; Chinya Ravishankar (January 2009). "Hash-Based Virtual Hierarchies for Scalable Location Service in Mobile Ad-hoc Networks". Mobile Networks and Applications. 14 (5): 625–637. doi:10.1007/s11036-008-0144-3.

- ↑ Mayank, Anup; Phatak, Trivikram; Ravishankar, Chinya (2006), Decentralized Hash-Based Coordination of Distributed Multimedia Caches (PDF), Proc. 5th IEEE International Conference on Networking (ICN'06), Mauritius: IEEE